Installing packages

# install.packages("keras")

# devtools::install_github("rstudio/keras")

library(keras)

install_keras()

use_session_with_seed(1,disable_parallel_cpu = FALSE)

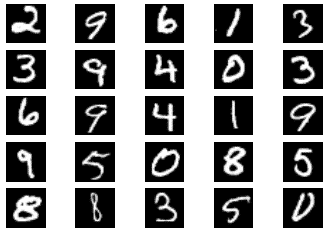

Generating the training set

# Load the training set of handwritten digit images

mnist <- dataset_mnist()

x_train <- mnist$train$x

y_train <- mnist$train$y

x_test <- mnist$test$x

y_test <- mnist$test$y

MNIST data

# Reshape each 28x28 image into a vector of length 784

x_train <- array_reshape(x_train, c(nrow(x_train), 784))

x_test <- array_reshape(x_test, c(nrow(x_test), 784))
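As a rough illustration of what this reshape does, the sketch below flattens a tiny made-up array in base R. It assumes that `array_reshape()` fills values in row-major (C-style) order, which is its documented default. The toy array `imgs` and the helper `flatten_row_major()` are hypothetical names introduced here, not part of the tutorial.

```r
# Toy stand-in for the MNIST tensor: 2 "images" of 2 x 3 pixels each.
imgs <- array(1:12, dim = c(2, 2, 3))

# Hypothetical helper: flatten every image row by row (row-major order),
# producing one row per image in the result.
flatten_row_major <- function(x) {
  t(apply(x, 1, function(img) as.vector(t(img))))
}

flat <- flatten_row_major(imgs)
dim(flat)   # 2 rows (images), 6 columns (pixels)
flat[1, ]   # pixels of the first image, read row by row
```

With the real data the same idea turns a 60000 x 28 x 28 array into a 60000 x 784 matrix, one image per row.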

# Rescale pixel values from the 0-255 range to 0-1

x_train <- x_train / 255

x_test <- x_test / 255

# One-hot encode the labels: which digit (0-9) each image belongs to

y_train <- to_categorical(y_train, 10)

y_test <- to_categorical(y_test, 10)
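To make the encoding step concrete, here is a minimal base R sketch of one-hot encoding. The helper `one_hot()` and the example labels are hypothetical and only mimic the shape of what `to_categorical(y, 10)` returns; this is not the library's implementation.

```r
# Hypothetical helper: one row per label, with a 1 in the column
# that corresponds to that digit and 0 everywhere else.
one_hot <- function(labels, num_classes) {
  out <- matrix(0, nrow = length(labels), ncol = num_classes)
  out[cbind(seq_along(labels), labels + 1)] <- 1  # R is 1-indexed
  out
}

labels  <- c(5, 0, 9)        # made-up example labels
encoded <- one_hot(labels, 10)
encoded[1, ]                 # the 1 sits in position 6, i.e. digit 5
```

Each row sums to 1, which is the form the `categorical_crossentropy` loss expects for its targets.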

Defining the model

# Define the model

model <- keras_model_sequential()

# Add the fully connected (dense) layers and the dropout layers used for regularization

model %>%
  layer_dense(units = 256, activation = 'relu', input_shape = c(784)) %>%
  layer_dropout(rate = 0.4) %>%
  layer_dense(units = 128, activation = 'relu') %>%
  layer_dropout(rate = 0.3) %>%
  layer_dense(units = 10, activation = 'softmax')

# Display the defined model

summary(model)

_____________________________________________________________________
Layer (type)                    Output Shape                 Param #
=====================================================================
dense_8 (Dense)                 (None, 256)                  200960
_____________________________________________________________________
dropout_3 (Dropout)             (None, 256)                  0
_____________________________________________________________________
dense_9 (Dense)                 (None, 128)                  32896
_____________________________________________________________________
dropout_4 (Dropout)             (None, 128)                  0
_____________________________________________________________________
dense_10 (Dense)                (None, 10)                   1290
=====================================================================
Total params: 235,146
Trainable params: 235,146
Non-trainable params: 0
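The parameter counts in the summary can be checked by hand: a dense layer has one weight per input-unit pair plus one bias per unit, and dropout layers add no parameters. The short sketch below redoes that arithmetic in base R; `dense_params()` is a hypothetical helper written for this check.

```r
# A dense layer has one weight per (input, unit) pair plus one bias per unit.
dense_params <- function(n_inputs, n_units) n_inputs * n_units + n_units

layer_sizes <- c(784, 256, 128, 10)   # input size followed by each dense layer
params <- dense_params(head(layer_sizes, -1), tail(layer_sizes, -1))
params        # 200960 32896 1290
sum(params)   # 235146
```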

Compiling the model

# Configure the network for training: loss, optimizer and metrics

model %>% compile(
  loss = 'categorical_crossentropy',
  optimizer = optimizer_rmsprop(),
  metrics = c('accuracy')
)
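To give some intuition for the loss chosen here, the sketch below computes a softmax and the categorical cross-entropy for a single made-up sample in base R. The functions `softmax()` and `cross_entropy()` and the toy scores are assumptions for illustration; Keras computes the same quantity internally and averages it over each batch.

```r
# Softmax turns raw scores into probabilities that sum to 1.
softmax <- function(z) {
  e <- exp(z - max(z))   # subtract the max for numerical stability
  e / sum(e)
}

# Categorical cross-entropy for one sample with a one-hot target.
cross_entropy <- function(target, probs) -sum(target * log(probs))

scores <- c(2.0, 1.0, 0.1)     # made-up scores for a 3-class toy problem
target <- c(1, 0, 0)           # the true class is the first one
probs  <- softmax(scores)
cross_entropy(target, probs)   # equals -log(probs[1])
```

Because the target is one-hot, only the probability assigned to the true class contributes, so the loss shrinks toward 0 as that probability approaches 1.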

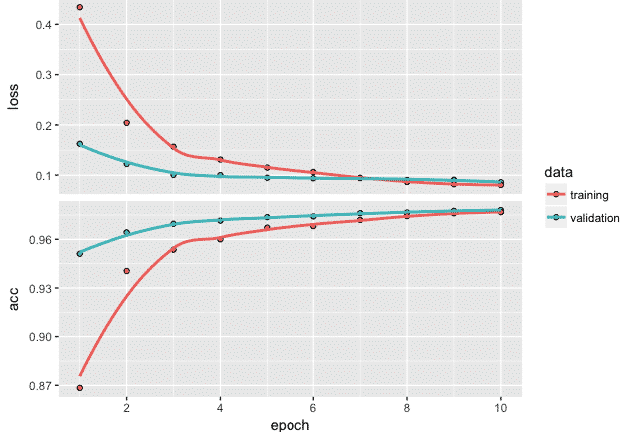

Training the model

fit <- model %>% fit(
  x_train, y_train,
  epochs = 10, batch_size = 128,
  validation_split = 0.2
)

plot(fit)
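The 48000/12000 split reported in the training log follows directly from `validation_split = 0.2`: Keras holds out the last 20% of the training samples for validation, before any shuffling. The arithmetic can be sketched in base R as follows; the variable names are introduced here for illustration only.

```r
n_samples        <- 60000   # size of the MNIST training set
validation_split <- 0.2
batch_size       <- 128

n_val   <- n_samples * validation_split             # samples held out for validation
n_train <- n_samples - n_val                        # samples actually used for training
batches_per_epoch <- ceiling(n_train / batch_size)  # weight updates per epoch

c(train = n_train, validation = n_val, batches = batches_per_epoch)
```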

Train on 48000 samples, validate on 12000 samples

Epoch 1/10

48000/48000 [==============================] - 4s 75us/step - loss: 0.4323 - acc: 0.8681 - val_loss: 0.1643 - val_acc: 0.9508

Epoch 2/10

48000/48000 [==============================] - 3s 68us/step - loss: 0.2041 - acc: 0.9401 - val_loss: 0.1234 - val_acc: 0.9635

Epoch 3/10

48000/48000 [==============================] - 3s 67us/step - loss: 0.1534 - acc: 0.9544 - val_loss: 0.1181 - val_acc: 0.9665

Epoch 4/10

48000/48000 [==============================] - 3s 66us/step - loss: 0.1340 - acc: 0.9597 - val_loss: 0.0941 - val_acc: 0.9736

Epoch 5/10

48000/48000 [==============================] - 3s 60us/step - loss: 0.1173 - acc: 0.9657 - val_loss: 0.0973 - val_acc: 0.9720

Epoch 6/10

48000/48000 [==============================] - 3s 64us/step - loss: 0.1040 - acc: 0.9700 - val_loss: 0.0900 - val_acc: 0.9758

Epoch 7/10

48000/48000 [==============================] - 3s 61us/step - loss: 0.0973 - acc: 0.9728 - val_loss: 0.0926 - val_acc: 0.9754

Epoch 8/10

48000/48000 [==============================] - 3s 62us/step - loss: 0.0892 - acc: 0.9731 - val_loss: 0.0930 - val_acc: 0.9765

Epoch 9/10

48000/48000 [==============================] - 3s 62us/step - loss: 0.0849 - acc: 0.9754 - val_loss: 0.0975 - val_acc: 0.9757

Epoch 10/10

48000/48000 [==============================] - 3s 60us/step - loss: 0.0820 - acc: 0.9765 - val_loss: 0.0922 - val_acc: 0.9770

Evaluating the model

# Evaluate the model on the test set

model %>% evaluate(x_test, y_test)

10000/10000 [==============================] - 0s 49us/step

$loss

[1] 0.07902947

$acc

[1] 0.9787

# Predict classes for the test images using the trained model

model %>% predict_classes(x_test)

[1] 7 2 1 0 4 1 4 9 5 9 0 6 9 0 1 5 9 7 3 4 9 6 6 5 4 0 7 4 0 1 3 1 3 4 7 2 7 1 2 1 1 7 4 2 3 5 1 2 4 4 6 3 5 5 6 0 4 1 9 5 7 8 9 3 7 4 6 4 3 0 7 0 2 9 ....
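Each predicted class is simply the position of the largest softmax probability for that image. If `predict_classes()` is not available in your keras version (it appears to have been dropped in later releases), the same result can be obtained from a probability matrix. A minimal base R sketch with made-up probabilities, where `probs` and `truth` are hypothetical values and not output from the model above:

```r
# Made-up softmax outputs for 3 images over the 10 digit classes.
probs <- matrix(0.01, nrow = 3, ncol = 10)
probs[1, 8] <- 0.91   # most mass on digit 7
probs[2, 3] <- 0.91   # most mass on digit 2
probs[3, 2] <- 0.91   # most mass on digit 1

# Column index of the largest probability in each row, shifted to 0-9.
pred <- max.col(probs, ties.method = "first") - 1
pred

truth <- c(7, 2, 0)   # made-up true labels
mean(pred == truth)   # fraction classified correctly
```

With the real model, `probs` would come from `model %>% predict(x_test)`, and comparing against the original integer labels (before one-hot encoding) gives the test accuracy.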

Very good guide. Ideally it could be carried over to an applied setting such as the corporate one, where we data scientists have to work in Windows environments. In my case I get stuck because keras needs TensorFlow, which in turn needs Python 3.5. The latest Anaconda ships Python 3.7, so compatibility is lost.

With RStudio it runs without problems, so you save yourself the use of Python and the Anaconda installation.

Hello, I would like to know if you can help me with a question. I have a network that makes predictions with Keras. In my case it is a forecasting problem, but I see something odd in the results: the predicted values seem to be lagged by one period.