Spark Streaming definition

Apache Spark Streaming is an extension of the Spark core API that handles real-time data processing in a scalable, high-performance, fault-tolerant manner.

Spark Streaming was originally developed at the University of California, Berkeley; Databricks is currently responsible for supporting and improving it.

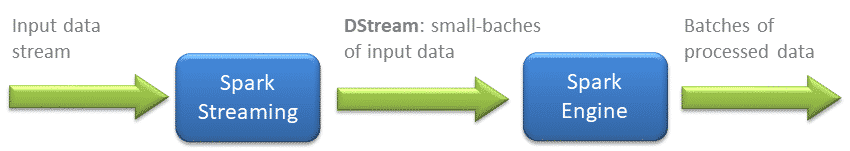

Broadly speaking, Spark Streaming takes a continuous flow of data and divides it into a discretized stream, called a DStream, so that the Spark core can process it.
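To make the discretization idea concrete, here is a minimal Python sketch (not Spark's API) that groups records into fixed-length micro-batches by arrival time. The function name `discretize` and the `(arrival_time, value)` record shape are hypothetical; in Spark Streaming the batch interval is chosen when the streaming context is created, and each resulting batch becomes one RDD.

```python
def discretize(events, batch_interval):
    """Group a list of (arrival_time, value) records into micro-batches.

    Hypothetical helper for illustration only. Returns a list of
    (batch_start, [values]) pairs in time order, with one entry per
    interval between the first and last arrival, including empty ones.
    """
    if not events:
        return []
    buckets = {}
    for arrival_time, value in events:
        # Map each arrival time to the index of the interval it falls into.
        index = int(arrival_time // batch_interval)
        buckets.setdefault(index, []).append(value)
    first, last = min(buckets), max(buckets)
    # One batch per interval, even when nothing arrived during it.
    return [(i * batch_interval, buckets.get(i, [])) for i in range(first, last + 1)]


batches = discretize([(0.2, "a"), (0.9, "b"), (1.4, "c"), (3.1, "d")], 1.0)
```

With a 1-second interval, the records arriving at 0.2 s and 0.9 s land in the first batch, and the interval starting at 2.0 s still yields an empty batch, much as a DStream produces an RDD (possibly empty) for every interval.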

Spark Streaming can ingest data from many sources, such as Kafka, Flume, RabbitMQ, Kinesis, ZeroMQ or TCP sockets.

The Spark engine (core) processes the data with high-level functions such as map, reduce, join and window, as well as with machine learning and graph algorithms. After processing, the results can be stored in file systems or databases and then, for example, presented in dashboards.
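The map, reduce and window steps can be sketched in plain Python as a word count over micro-batches. This only models the semantics of operations such as Spark's DStream `window` and `reduceByKeyAndWindow`; the helper names are hypothetical, and window length and slide are counted in batches rather than in durations.

```python
from collections import Counter


def word_counts(batch):
    """map + reduce on one micro-batch: split lines into words, count each word."""
    counts = Counter()
    for line in batch:
        for word in line.split():  # flatMap: line -> words
            counts[word] += 1      # reduce by key: sum a 1 per word
    return counts


def windowed_counts(batches, window_length, slide):
    """Sum per-batch word counts over a sliding window.

    window_length and slide are numbers of batches (a simplification; Spark
    uses durations that are multiples of the batch interval). Only full
    windows are produced here.
    """
    per_batch = [word_counts(b) for b in batches]
    windows = []
    for end in range(window_length, len(per_batch) + 1, slide):
        total = Counter()
        for counts in per_batch[end - window_length:end]:
            total.update(counts)  # Counter.update adds counts together
        windows.append(total)
    return windows


result = windowed_counts([["a b", "a"], ["b"], ["a c"]], window_length=2, slide=1)
```

Each window position reuses the per-batch results instead of re-reading raw lines, which is the same reason windowed operations in Spark are defined on top of already-reduced batches.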

DStream (discretized stream): an abstraction provided by Spark Streaming that represents a time-ordered sequence of RDDs, each holding the data received during a particular time interval. Thanks to this abstraction, the core can analyze the data without knowing that it is processing a stream, since Spark Streaming takes care of creating and coordinating the RDDs.
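A minimal sketch of this division of labor, assuming micro-batches shaped as `(batch_time, records)` pairs: a hypothetical coordinator `run_stream` hands each batch to an ordinary function, which never learns that it is part of a stream. In Spark Streaming the framework itself plays the coordinator role, and per-batch output is typically reached through operations such as `foreachRDD`.

```python
from typing import Callable, List, Tuple, TypeVar

T = TypeVar("T")
R = TypeVar("R")


def run_stream(batches: List[Tuple[float, List[T]]],
               batch_job: Callable[[List[T]], R]) -> List[Tuple[float, R]]:
    """Hypothetical coordinator: pass each micro-batch to a plain batch function.

    batch_job only ever receives a list of records; it sees neither the
    batch time nor the stream, much like Spark core receiving one RDD.
    """
    return [(batch_time, batch_job(records)) for batch_time, records in batches]


def total_length(records: List[str]) -> int:
    """An ordinary batch computation with no streaming logic in it."""
    return sum(len(r) for r in records)


static_result = total_length(["abc", "de"])  # used on a static dataset
stream_result = run_stream([(0.0, ["abc"]), (1.0, []), (2.0, ["de", "f"])], total_length)
```

The same `total_length` works on a static list and on every micro-batch, because all the stream bookkeeping lives in the coordinator.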

Spark Streaming makes Apache Spark the true successor to Hadoop, as it was the first framework to fully support the lambda architecture, in which batch and streaming data processing run side by side.
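The lambda architecture can be sketched as follows, assuming a hypothetical stream of `(user, page)` page-view events: a batch layer recomputes a view over all historical data, a speed layer covers the events that arrived since the last batch run, and a serving layer merges both views at query time. Using the same counting function in both layers reflects the advantage claimed for Spark: one engine and one piece of logic for batch and streaming.

```python
from collections import Counter


def count_by_user(events):
    """Shared logic for both layers: number of page views per user."""
    return Counter(user for user, _page in events)


def query(user, batch, speed):
    """Serving layer: merge the batch and real-time views for one user.

    Counter returns 0 for users it has not seen.
    """
    return batch[user] + speed[user]


# Hypothetical data: (user, page) page-view events.
historical = [("ana", "/"), ("bo", "/a"), ("ana", "/b")]  # covered by the last batch run
recent = [("ana", "/c"), ("cy", "/")]                      # arrived since then

batch_view = count_by_user(historical)  # batch layer: full recomputation
speed_view = count_by_user(recent)      # speed layer: recent events only
```

For example, `query("ana", batch_view, speed_view)` combines two historical views with one recent view.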

Features

- Popular, mature and widely adopted technology.

- It has a large community of developers.

- Provides programming APIs for Java, Scala, Python, R and SQL.

- Provides libraries for machine learning and graph processing.

- Allows custom memory management (as Flink does).

- Supports watermarks.

- Supports event-time processing.

- It is not true streaming (it processes micro-batches), so it is not ideal when very low latency (< 0.5 s) is required.

- Fault tolerant thanks to its micro-batch design.

- It exposes a large number of configuration parameters, which makes it harder to use.

- It is stateless by nature.

- In many advanced features it falls behind Flink.
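Event-time processing and watermarks can be illustrated with a small simulation. It is a sketch, not Spark code: in Spark these features come from Structured Streaming (for example `withWatermark` on a streaming DataFrame), and the function below, its parameters and its per-record watermark update are simplifying assumptions. The watermark is the latest event time seen so far minus an allowed delay; a window is closed once the watermark moves past its end, and records belonging to an already-closed window are dropped.

```python
def event_time_counts(events, window, delay):
    """Count records per tumbling event-time window, using a watermark.

    Hypothetical helper. events: (event_time, value) pairs in arrival order,
    times in seconds. window: window length; delay: how late a record may be.
    Returns (closed, dropped): counts for windows the watermark has closed,
    and the values that arrived after their window was closed.
    """
    open_windows = {}  # window start -> running count
    closed = {}
    dropped = []
    max_event_time = float("-inf")
    for event_time, value in events:
        # Watermark: latest event time seen so far minus the allowed delay.
        # (Simplification: updated per record; Spark updates it per micro-batch.)
        max_event_time = max(max_event_time, event_time)
        watermark = max_event_time - delay
        start = (event_time // window) * window
        if start + window < watermark:
            dropped.append(value)  # its window is already closed
        else:
            open_windows[start] = open_windows.get(start, 0) + 1
        # Close every window whose end the watermark has moved past.
        expired = [s for s in open_windows if s + window < watermark]
        for s in expired:
            closed[s] = open_windows.pop(s)
    return closed, dropped


closed, dropped = event_time_counts(
    [(1, "a"), (12, "b"), (4, "c"), (22, "d"), (8, "e"), (27, "f")],
    window=10, delay=5,
)
```

With 10-second windows and a 5-second delay, the record stamped 4 s still counts even though it arrives after one stamped 12 s, but the record stamped 8 s is dropped: it arrives after one stamped 22 s, so the watermark (17 s) has already passed the end of the [0, 10) window.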

Source: Official website
